Chapter 5: Memory Management

5.1 Memory management

Instructions must be in main memory in order to be

fetched and executed by a processor. Any data

operand referenced by an instruction must be in

main memory for the instructions to exec

successfully.

main memory = volatile => power failure:

information stored is lost

disk storage: non-volatile; much system design

effort is concerned with writing sufficient

information safely out to disk to guard against loss

of main memory on a crash.

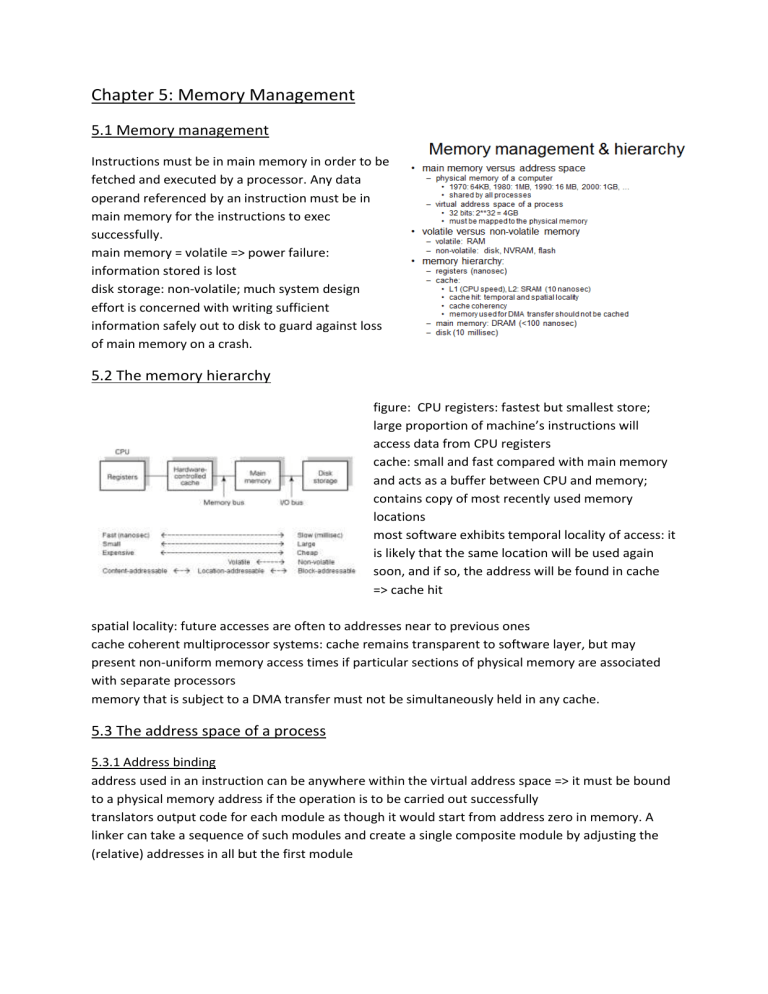

5.2 The memory hierarchy

figure: CPU registers: fastest but smallest store;

large proportion of machine’s instructions will

access data from CPU registers

cache: small and fast compared with main memory

and acts as a buffer between CPU and memory;

contains copy of most recently used memory

locations

most software exhibits temporal locality of access: it

is likely that the same location will be used again

soon, and if so, the address will be found in cache

=> cache hit

spatial locality: future accesses are often to addresses near to previous ones

cache coherent multiprocessor systems: cache remains transparent to software layer, but may

present non-uniform memory access times if particular sections of physical memory are associated

with separate processors

memory that is subject to a DMA transfer must not be simultaneously held in any cache.

5.3 The address space of a process

5.3.1 Address binding

address used in an instruction can be anywhere within the virtual address space => it must be bound

to a physical memory address if the operation is to be carried out successfully

translators output code for each module as though it would start from address zero in memory. A

linker can take a sequence of such modules and create a single composite module by adjusting the

(relative) addresses in all but the first module

system structure is extended to

include one way of performing

address translation. The PC will

contain a virtual address which must

be translated to a real address before

an instruction is fetched

1 – instructie werkt altijd op register van CPU

2 – er wordt vanuit gegaan dat er op adres nul begonnen

wordt, maar dat is niet altijd zo (virtueel), er lopen nog

andere processen i/h virtueel geheugen

3 – loader moet virtuele adressen omzetten in concrete,

fysieke adressen (15 => 415)

5.3.2 Static binding

OS will give loader a base address from which to load the module, having identified a region of

physical memory that is sufficiently large to contain the program

should the loader adjust all relative addresses in the module, converting them to absolute physical

addresses before loading it? => static binding

if this was done, then once a program was loaded into memory the addresses in it would be fixed

and the code or data at these addresses in it would be fixed and the code or data at these addresses

could not be moved in memory without further relocation

5.3.3 Dynamic binding

a given program can run anywhere in physical

memory and can be moved around by the OS; all

of the addresses that it is using are relative to its

own virtual address space and so it is unaware of

the physical locations at which it happens to have

been placed;

it might be possible to protect processes from

each other and the OS from app processes by

whatever mechanism we employ for isolating the

addresses seen by processes

bind the virtual addresses to physical addresses when the instructions are executed

figure: the process sees an environment in which it uses addresses starting from zero. The real

machine is shared by a number of processes, and we see the virtual memory of the process

occupying a portion of real physical memory. The way in which the virtual address space is mapped

to the physical memory must therefore be changed each time the OS switches from one process to

another

5.3.4 Hardware-assisted relocation and protection

physical address of this first location is base

of process. Suppose an instruction is

fetched and decoded and contains address

reference => reference is relative to base,

so the value of the base must be added to it

in order to obtain the correct physical

address. Simplest form of dynamic

relocation hardware is a single base

register and a memory mgmt. unit (MMU)

to perform the translation. The OS must

load the base register as part of setting up the state of a process before passing control to it.

=> does not provide any protection between processes. Natural to combine relocation and

protection in a single set of registers by introducing a second register: limit register , that delimits

the upper bound of the program => figure: typical instruction exec cycle augmented with protection

checks and address relocation

(base & limit: tussen die grenzen wordt gewerkt, daar moet instructive zitten; moet hardware-matig

gebeuren)

5.4 Segmented virtual memory

(virtueel geheugen opdelen in stukken, verfijnde protectie mogelijk => vb. data kan je niet uitvoeren)

1

2

1 – two processes sharing a compiler

2 – relocation hardware to realize scheme 1

virtual address space of the processes: the most significant bit of an address is now taken as a

segment identifier with 0 indicating the data segment (segment 0) and 1 indicating the code segment

(segment 1). If this bit is 0 then base register 0 is used for relocation, same for 1

the system might support separate read, write and execute rights

(2 – 1 bit gebruikt om segmenten aan te duiden => 2 segmenten => 2*2^31 = 2^32 = 4 gig; als je 4

bits gebruikt: 16 segmenten van 256 mb => hoe meer bits je gebruikt om het segment aan te duiden,

hoe kleiner de segmenten maar het total van virtueel geheugen blijft gelijk. Segment kan niet groter

zijn dan fysiek geheugen.

niet alle segmenten van een proces moeten zich in het geheugen bevinden. Als proces dan verwijst

naar segment met virtueel adres dat nog niet in fysiek geheugen zit, dan kan proces niet verder =>

segment moet eerst worden ingeladen en dan wordt proces weer running (addressing exception)

separate areas for code and data, for example,

code, stack and heap. Language systems have

conventions on how the virtual address space is

arranged and a common scheme is shown in the

figure. We see a code segment, which will not

grow in size, followed by a data area which may

well grow. At the top of the virtual address space

we see the stack growing downwards in memory.

The segment is the unit of protection and sharing,

and the more we have, the more flexible we can

be.

virtual address is split into a segment

number and a byte offset within the

segment.

Advantages of segmented virtual memory:

* virtual address space of a process is divided into logically distinct units which correspond to

the constituent parts of a process

* segments are the natural units of access control, that is, the process may have different

access rights for different segments

* segments are the natural units for sharing code and data objects with other processes

disadvantages:

* inconvenient for the OS to manage storage allocation for variable-sized segments

*after the system has been running for a while the free memory can become fragmented =>

external fragmentation – it might be that there is no single area large enough to hold some

segment

* limited segment size: restricted by physical memory and y bits

* hardware support needed

*swapping may result in severe performance problems

5.5 Paged virtual memory

paging is a solution to fragmentation

blocks of a fixed size are used for

memory allocation so that if there is

any free store, it is of the right size.

Memory is divided into page frames

and the user’s program is divided into

pages of the same size.

Typically each page is a reasonably

small size, such as 4 kb. Figure: the

process has still organized its virtual

address space so that it uses a

contiguous block of addresses starting

at 0. All the pages in this example are shown in main memory.

a portion of the disk storage can be used as an extension to main memory and the pages of a

process may be in main memory and/or in this backing store. The OS must manage the two levels

of storage and the transfer of pages between them. It must keep a page table for each process to

record this and other information.

5.5.1 Address translation

figure: virtual to physical address

translation by a MMU. Before a page

can be addressed it must have an entry

set up by the OS in the hardware table

shown. This table, the translation

lookaside buffer (TLB) is searched

associatively as part of every address

reference. The virtual page number is

extracted from the virtual address and

an associative lookup is initiated. If an address reference is made to a page which is present in

main memory but does not have an entry in the TLB: address translation fails. Same if an address

reference is made to a page which is not present in main memory => no match will be found in

the table, and the addressing hardware will raise an exception: page fault

no need for an entire program to be loaded in main memory. Demand paging: When a new page

is addressed, to fetch an instruction or access a data operand, it is brought into main memory on

demand.

5.5.2 copy-on-write paging

often the case that a copy of a writeable data area of one process is required by another.

the data is initially the same but any updates made by a process are not visible to the other

processes that have copies of the data. New page table entries will be set up for these pages for

the process with the copy. The two processes now have separate physical copies of the pages

and separate page table entries for them.

copy-on-write: the process which is to receive the copy of the data is given new page table

entries which point to the original physical pages. Only if either process writes to a page of the

data area, a new copy is actually made.

Three main disadvantages of paging:

* page size is choice made by CPU or OS designer: it may not fit with the size of program data

structures => can lead to internal fragmentation

* there may be no correspondence between page protection settings and app data structures. If

two processes are to share a data structure then they must do so at the level of complete pages

* supporting per-process page tables is likely to require more storage space for the OS data

structures

(paginering: principe ~segmentering, maar blokjes hebben vaste grootte

=> zowel virtueel adres als fysiek geheugen zodanig dat een stukje uit de virtuele adresruimte in

een page frame vh intern geheugen geladen kan worden => geen externe fragmentatie

MAAR gemiddeld gezien is laatste pagina half leeg => interne fragmentering

12 bit offset: 12 bits om binnen een pagina te nummeren; 20 bits over om pagina’s te nummeren

=> ong 1 mioe pagina’s (per proces)

hoe hardware-matig ondersteunen? Nieuwe technologie nodig

page fault: verwijzing naar pagina die nog niet in intern geheugen zit (enkel pagina’s die nodig

zijn w ingeladen)

TLB: soort cache voor reeds gebruikte paginanummers => kan associatief doorzocht worden

(content addressable)

offset blijft ongewijzigd => kan je rechtstreeks overzetten bij virtueel => fysiek geheugen

figuur: moet hardware-matig gebeuren; indien software-matig, wordt voor elke instructie een

programma uitgevoerd, maar zo’n programma is zelf een reeks van instructies die naar adressen

verwijzen => zolang dit niet hardware-matig kan gebeuren, was paginering niet mogelijk

notie van limit-registers speelt geen rol meer omdat alle pagina’s dezelfde grootte hebben (offset

kan nooit groter zijn dan 4 kb)

64 bit: nog 2^52: tabel wordt enkele petabytes groot => past zelfs niet in intern geheugen

LRU: least recently used: kans is groot dat je die dan toch niet snel opnieuw gebruikt => vaak

benaderd met NUR (not used recently) policy (want LRU geeft te veel overhead) => elke keer dat

naar een pagina gerefereerd wordt, verandert de rangschikking van de pagina’s, in welke

volgorde ze gebruikt zijn => hele lijst die je moet bijhouden en constant updaten => NUR is

praktischer)

5.6 Paged segmentation

each segment of a process is divided into pages. This means that the virtual address space of a

process is logically divided into segments but is no longer necessary for each segment to be

contiguous in physical memory.

Figure 1: idea of a simple case of

two segments per process

Figure 2: how a virtual address

might be interpreted when both

segmentation and paging hardware are used.

(combinatie van beide: segmenten pagineren (opnieuw logische structuur in blokken

geen base- en limit-register meer

per processor een register

segmenten niet meer beperkt door grootte vh intern geheugen

door paginering moet niet hele segment in intern geheugen kunnen

nadeel is groot aantal tabellen)

5.7 Memory management data structures

5.7.1 Multi-level page tables

non-segmented 32-bit virtual address space is

mapped to a 32-bit physical address using a twolevel page table and pages of size 4kb. The

physical address of the first-level page table is

given by a page table base register (PTBR). The

first-level mapping is then made using the most

significant 10 bits of the virtual address as an

index into that table, yielding the physical

address of the second-level table to use. The

next 10 bits index that table, yielding the physical frame number which is combined with the last 12

bits. If there are no mappings within a particular second-level table then that table can be removed

and an ‘invalid’ bit set at the top level.

(elk process heeft een hiërachische tabel met niveaus, elk niveau heeft ong 1000 ingangen (10 bits

per niveau) => hier 2 niveaus)

5.8 An example of a MMU

1

2

1 - Basic design of the MIPS R2000 chip. as well as a RISC processor there is also a system control

coprocessor on the chip (TLB: referenties die erin zitten zijn net gebruikt => kans op hit is groot (level

1 cache met 64 ingangen: hoeft maar 256 kb groot te zijn => 64 * 4kb) in moderne architecturen

gebruikt men 2 TLB’s: 1 voor instructies en 1 voor data)

2 – the MMU contains a simple TLB with space for 64 entries. Each non-empty entry in the TLB

indicates a page in virtual memory of a process and its corresponding location in physical memory

the MMU contains 4 registers: enty-hi, entry-lo, index and random. The OS uses them to insert or

replace entries in the TLB; the addressing hardware uses them to perform address translation.

32-bit address is divided into a 20-bit page number and a 12-bit offset. Every time a virtual address is

to be translated, the process number and virtual page number are entered in the entry-hi register.

Matched TLB entry is then read and the physical address of the base of that page is then available in

entry-lo.

arrangement of virtual address space:

the OS occupies half of every process address space. OS

code is in a kernel segment which is cached but not

mapped; device registers and buffers are in a kernel

segment which is neither cached nor mapped. OS data,

such as process page tables and data areas, is both

cached and mapped, as is the user segment.

(overeenkomstige adresruimte

hardwarematig opgedeeld in 4 segmenten

=> als begint met 1: kernel (OS), 0: user

resident: kan niet uitgeswapped worden

zoveel mogelijk dingen proberen te cachen => sneller

beschikbaar – typisch zoveel mogelijk uit user segment

kernel segment 1 kan niet gecached worden

veranderen van registers is onafhankelijk van wat de CPU doet => onzin om te gaan cachen, want

veranderd voortdurend door devices => CPU ziet dan verkeerde waarden in cache

er zijn devices die geen virtueel geheugen

nodig hebben

real-time systemen hebben problemen met

virtueel geheugen (conflicteert vaak) => als je

bv paging gebruikt is response time niet meer

deterministisch en dat is nu net wat het wel

moet zijn bij real time

intern geheugen is gedeeld => bv 100

processen van 4 gig willen allemaal tegelijk het

intern geheugen gebruiken + we gaan naar

processen met groter virtueel geheugen dan 4

gig => kan niet eens helemaal in intern

geheugen;

schijf is bottleneck => er zijn betere swapping devices (hoe groter intern geheugen, hoe minder je

moet swappen – swappen verminderen maar niet vermijden)

sneller geheugen: solid state (SSD), flash (RAM 100 nanosec, schijf 10 millisec, solid state tussen RAM

& schijf ~ 1 millisec