Chapter 4: Support for processes

Concept of process: an active element which causes the modules to be executed dynamically, unit of

resource allocation (process) and unit of scheduling (thread or process)

function of OS is to create the abstraction of a set of concurrent processes.

(programma = stuk tekst, process = actieve/dynamische uitvoering van programmatuur waarbij

resources (processor,…) nodig zijn)

4.1 Use of processes in systems

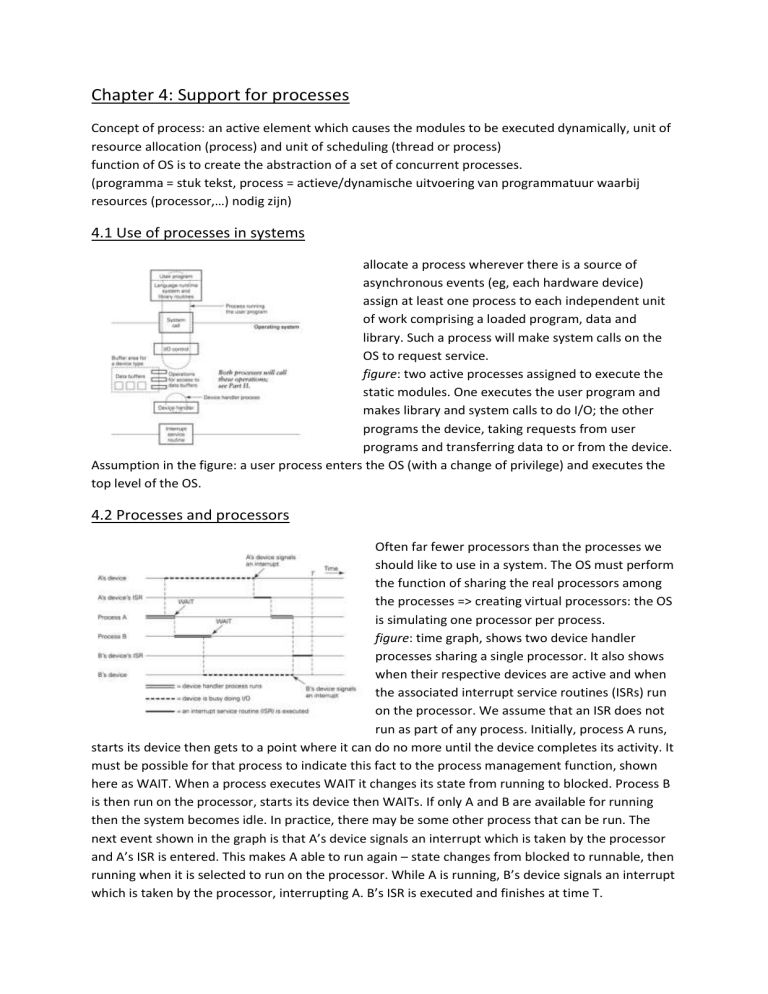

allocate a process wherever there is a source of

asynchronous events (eg, each hardware device)

assign at least one process to each independent unit

of work comprising a loaded program, data and

library. Such a process will make system calls on the

OS to request service.

figure: two active processes assigned to execute the

static modules. One executes the user program and

makes library and system calls to do I/O; the other

programs the device, taking requests from user

programs and transferring data to or from the device.

Assumption in the figure: a user process enters the OS (with a change of privilege) and executes the

top level of the OS.

4.2 Processes and processors

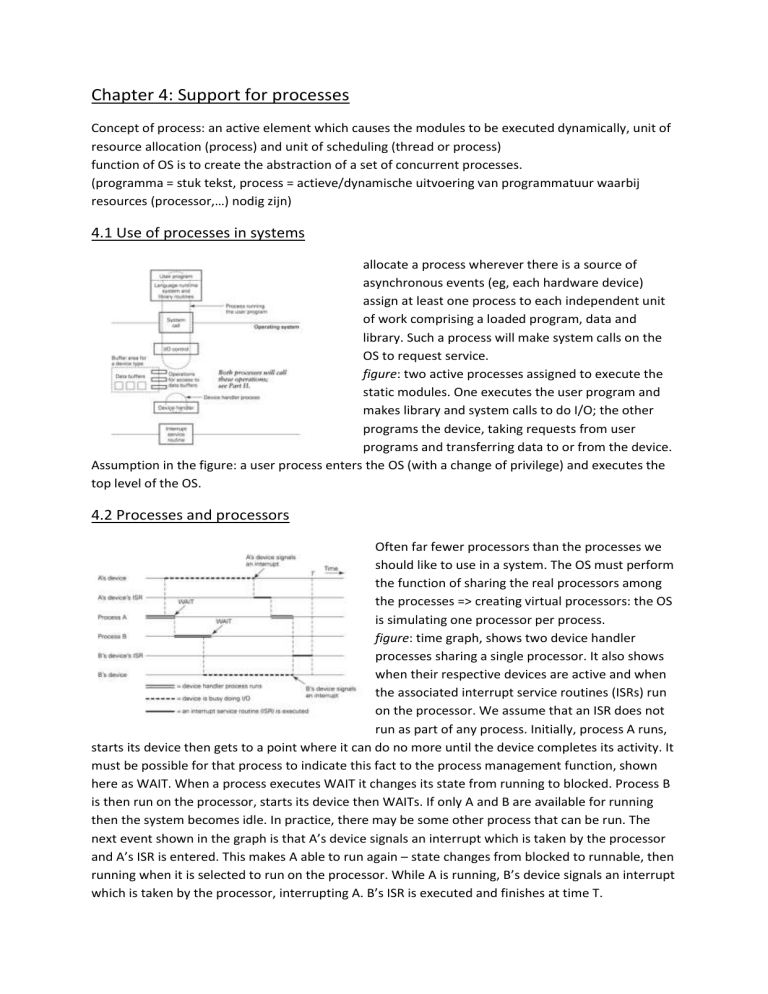

Often far fewer processors than the processes we

should like to use in a system. The OS must perform

the function of sharing the real processors among

the processes => creating virtual processors: the OS

is simulating one processor per process.

figure: time graph, shows two device handler

processes sharing a single processor. It also shows

when their respective devices are active and when

the associated interrupt service routines (ISRs) run

on the processor. We assume that an ISR does not

run as part of any process. Initially, process A runs,

starts its device then gets to a point where it can do no more until the device completes its activity. It

must be possible for that process to indicate this fact to the process management function, shown

here as WAIT. When a process executes WAIT it changes its state from running to blocked. Process B

is then run on the processor, starts its device then WAITs. If only A and B are available for running

then the system becomes idle. In practice, there may be some other process that can be run. The

next event shown in the graph is that A’s device signals an interrupt which is taken by the processor

and A’s ISR is entered. This makes A able to run again – state changes from blocked to runnable, then

running when it is selected to run on the processor. While A is running, B’s device signals an interrupt

which is taken by the processor, interrupting A. B’s ISR is executed and finishes at time T.

non-preemptive scheduling: processes only lose control of the processor voluntarily. Advantage: a

process can be assumed to have tidied up its data structures, and not be hogging some valuable

resource if it is allowed to finish its current task. Disadvantage: slow response to hardware events.

Non-preemptive scheduling is useless if fast response to unpredictable events is required.

(veronderstelling: maar 1 processor, dus A en B kunnen niet gelijk running zijn; WAIT: running-mode

=> blocked-mode; ISR maakt B van blocked terug runnable – non-preemptive scheduling: het

lopende proces gaat verder – ISR is stukje code, geen proces dus A blijft ondertussen running; code

wordt uitgevoerd in context v proces => A & code vormen 1 proces, niet verschillend)

4.3 Process state

figure: running on a processor: RUNNING state; able to

run on a processor: RUNNABLE state; unable to run on a

processor because it is awaiting some event and cannot

proceed until that event arrives: BLOCKED state.

preemptive scheduling: process is forcibly preempted

from its processor

(running => runnable: huidig proces even aan de kant

zetten om ander de kans te geven om te runnen)

4.3.1 Saving process state

contents of any processor registers must be saved

OS keeps a data block, a process descriptor (PD), for each process that it supports. When a process is

preempted or blocks voluntarily, the process state is saved in the process descriptor +other

information like the process’s state (blocked/runnable) and its start address.

information that must be in the process descriptor is that which is needed when the process is not in

main memory. (het proces dat ik onderbreek: toestand van bijhouden om later verder te runnen

vanaf dat punt; zelfs al is een proces blocked, descriptor kan veranderen => gepriviligeerd: alleen OS

kan dit, niet toegankelijk voor user processes)

4.3.2 Context switching

context switching: the process of saving the state of a process and setting up the state of another

the instructions that are executed in performing these operations and the frequency at which

context switching happens are an overhead at the lowest level of a system.

4.4 Synchronizing with the hardware: events and the WAIT operation

1

2

WAIT: allows processes to sync with hardware; wait for one specific event/ for any one event

possible hardware events known at design time => set of events can be encoded as an integer,

representing a bit-map.

1) process descriptor could encode the reason the process is blocked and the events of interest to

this process that have occurred

2) one way of implementing process-event synchronization. Assumption: it is well known which

system processes will wait on which events (eg: dedicated device handler that syncs with a single

device). Figure represents process descriptors as object – most OS: procedural – not object-oriented

– approach is taken. Figure: process level and implementation level for a WAIT for a specific event.

When the process invokes OS.WAIT(AnEvent), control is transferred to the OS method WAIT. The OS

object (not shown) in turn invokes WAIT(AnEvent) on the process descriptor for the current process.

Assuming that the event of interest has not already occurred, the process state is set to blocked and

the event it is waiting for, is recorded in the ‘events awaited by process’ slot. Some other process is

then run. When the event occurs, we assume that a method SIGNAL(anEvent) is invoked on the

process descriptor which causes the process state to be set to runnable and the reason for waiting to

be removed. The process will be selected to run again in due course. => system design is such that a

single, well-known process waits for each event so SIGNAL is invoked on the appropriate process

descriptor.

wake-up waiting: when the process requests to wait for the event, it can continue running and never

needs to enter the blocked state.

4.4.1 Race conditions

a race condition exists whenever the relative timing

of a set of events affects the outcome

The process will wait indefinitely for the event if this

is allowed to happen => WAIT and SIGNAL process

descriptor methods must be implemented as atomic

(event is al opgetreden maar process weet dit niet =>

process blijft en blijft wachten op die event en blijft

dus blocked => WAIT en SIGNAL mogen niet

overlappen!!! (probleem: sync tss processen))

4.4.2 Event and process objects

separate event objects form process descriptors

event object: SIGNAL, WAIT; process objects: BLOCK,

UNBLOCK

when a process executes OS.WAIT, the OS object OS

invokes WAIT on the event object. Process id is recorded in

the event data structure and BLOCK method is invoked on

the relevant process descriptor, causing the representation

of the process state to be changed from running to blocked.

SCHEDULE method could then be called to decide which

process to run next. When an event occurs, SIGNAL method

is invoked on the event object. If one or more processes are

recorded as waiting for the event, one of them can be made runnable by invoking UNBLOCK method

on the relevant process descriptor object. Again, scheduling is invoked after this. If no process is

awaiting the event, its occurrence is recorded as a wake-up waiting in the event data structure.

4.5 The process data structure

OS must handle many processes and will maintain a data structure holding their descriptors. The OS

should be able to choose and run the highest-priority process as quickly as possible. The selection

policy determines which process will be selected and is effected by the scheduling algorithm.

4.6 Scheduling: General approaches

scheduling: selecting a process to run

dispatching: setting up a process state in process registers (eerst scheduling, dan dispatching)

unary scheduling: system is idle, interrupt frees a process

binary scheduling: process is running, interrupt frees another process (or running process wakes up

another one)

general scheduling: when the process that is running terminates or blocks, a general schedule must

be carried out (process stopt en dan tussen alle andere gaan kijken welke voorrang krijgt)

process behaviour and priority

OS processes are put into permanent, static fixed-priority ordering.

figure 1: possible data structure: array or table of PDs

example of how information on processes might be held in a process mgmt. module and shows

system processes handled separately in this way => in fixed-priority order and OS’s scheduler will

search from the top of the table for a runnable system process.

multi-user system: nature of applic processes is unknown to OS and their behaviour will change

during a typical program run => time slice is allocated to process when it begins to run

figure 2: one or more queues of runnable processes may be chained through the user processes in

this table, using the link fields in the PDs. When a process becomes runnable it is added to the end of

the appropriate queue

figure 3: alternative view of high- and low-priority run queue.

When a process becomes runnable after being blocked it is

allocated to high-priority run queue. It if continues to block

before using up its time slice, it will always be scheduled from

the high-priority queue. If a process runs to the end of its time slice, it is moved to the end of the

low-priority queue. Only if high-priority queue is empty, a lowpriority queue is examined.

figure 4: system processes: highest priority, handled in static,

fixed-priority order (not shown)

high-priority queue: for user processes that blocked, waiting

for certain events (like page fault) and are now runnable

medium-priority: user processes that were blocked, waiting for

resources which are less critical for system performance and

are now runnable

low and lowest: for processes that use up their time slice; two queues allow for an allocation of two

priority levels for

processed when they are not doing I/O

(I/O bound processes krijgen prioriteit omdat deze een bottleneck vormen

=> als we compute-bound voorrang geven, gaat deze de CPU monopoliseren, nooit meer vrijgeven

=> runnable nu opgesplitst in high & low priority; hoe meer tijd een process gebruikt, hoe lager

prioriteit (multi-level feedback) vb windows 7 heeft 32 prioriteitsniveaus)

4.7 Scheduling for shared-memory multiprocessors

Processor, on becoming free, should execute code such that it first examines its local run queue and,

if that contains no runnable processes, should take a process from the global run queue(s).

an app should be able to indicate to the OS that it has a number of processes and to give their

relative priorities for scheduling purposes. It is then possible to run them simultaneously on the

processors of a multiprocessor.

need for inter-processor interrupt: example: a process A running on a processor P makes a process

runnable which is running on processor Q. an inter-processor interrupt from P to Q can start off the

required context switch.

one approach to process scheduling for shared-memory multiprocessors is to allocate the process at

the top of the run queue to the first processor that becomes free. This ignores the fact that a process

may have run recently on a particular processor and may have built useful state in both the cache

and the address translation unit. In the case of a page fault, eg, the process should continue on same

processor.

It might be appropriate to allow a process to busy wait on a page fault: keep the process scheduled

and running. The waiting process might execute in a tight loop, or spin, until it is interrupted by the

arrival of the required page. (busy waiting: je blijft wachten op event, maar blijft runnen, ook al doe

je eiglk niets – dus niet in blocked mode; beter als contect switch te duur is)

relationships between groups of processes: if certain processes are designed to work closely together

=> ideal arrangement: schedule them simultaneously on the multiple processors of the

multiprocessor

the counter-argument is that if the processes are sharing data it is better for them to run on the

same processor so that the same cache is used (cache coherency problem)

4.8 Process scheduling to meet real-time requirements

Preemptive scheduling with carefully chosen process priorities may not be sufficient to ensure that a

number of processes meet their timing requirements in a

real-time system.

two kinds of real-time processes: those which must respond

to unpredictable events in a specified time.

figure 1: two processes A and B each with a known

computation time (workA and workB) and a known length of

time in which the work must be completed. The graph shows this as a deadline (D), work time (W)

and slack time (S) for each process.

Figure 2: first graph: possible sequence of events for the two processes. First A’s event occurs and A

is scheduled. Before A’s work is complete, B’s event occurs.

The scheduler now has to decide whether to schedule A or B.

scheduler has the information to compute the deadlines of A

and B and the remaining slack time of A and B.

earliest deadline first policy: process B would be selected. B

then completes before its deadline by the value of its slack

time.

alternative strategy: first schedule the process with the least

remaining slack time, provided that they can both meet their

deadlines. => third graph: least slack first policy for A and B.

fourth graph: event for a third process C occurring.

Periodic schedules

known number of processes

schedule can be determined when system is designed

suitable value for scheduling period must be chosen

each process: priority proportional to frequency (Rate Monotonic schedule)

EDF, LSF can also be used, deadline being taken as the end of the current period and the slack

computed from this value.

Aperiodic processes

guaranteeing response to unpredictable events is more difficult

EDF, LSF

(figuur: je moet voice voorrang kunnen geven,

voice is kritisch

4.8.1 System structure and response to events

some OSs are executed procedurally, ie, OS code is

executed ‘in-process’. When a user process makes a

system call it becomes a system process and enters

the OS. Such systems tend to have very few

dedicated system processes and much of the work

that is done by one process may be on behalf of

others. Example: interrupt is services in the context

of whatever process is running when it occurs. Before returning to user mode, the process executes

system code to determine whether any process is due to be woken up.

the alternative design approach is to have the OS executed by dedicated processes and to request

service from them. (procedureel OS: groot deel vd code v OS wordt uitgevoerd in context van user

processen die call doen naar OS)

4.10 OS structure and placement of processes

figure: major components of a traditional, closed

OS; gives a possible placement of processes to

achieve dynamic execution of the system. Processes

are allocated as follows:

* single process is allocated to each user-level

activity: a user program exec or a command exec

* process is allocated to handle each device

instance. Such a process will wait for and respond to

device interrupts

* some memory mgmt. processes are indicated to

respond to address translation faults, to allocate

main memory to processes and to move processes

into and out of main memory as necessary

(swapping)

* certain modules: no permanent allocation of processes, the code is executed in the context of user

processes, as a result of a system call => state change from user state to system state

* the process mgmt. module cannot itself be implemented as processes

(process mgmt.: scheduler kan zelf geen process zijn => schedulet wnr welk process uitgevoerd

wordt, dus als dat zelf een process zou zijn, wie schedulet dan scheduler? – scheduling is onderdeel

van process mgmt.)

4.11 Multi-threaded process implementation

User threads share address space of the app and al the resources allocated to the app

Some OS allow user threads to be registered with them as schedulable kernel threads. Such OS

support multi-threaded processes.

the context switching overhead is relatively low

some OS do not support multi-threaded processes and each process is defined to have a separate

address space => heavyweight process moded: overhead on a context switch is high. If an OS is to run

on a shared-memory multiprocessor, it is important that multi-threaded processes are supported.

(het zijn eiglk de draden van een process die gescheduled worden (kunnen dus parallel op meerdere

processoren uitgevoerd worden) => voor process moet hele omgeving (context) opgezet worden,

draden hergebruiken die gwn => scheduling & dispatching gaat dus sneller voor draden

oude OS kennen multi-threading niet => ziet geen draden, enkel processen => heavyweight process

model)

4.12 Processes in languages, runtime systems and OS

when a sequential programming language is used,

one OS process is created to run the program (a).

when a concurrent programming language is used,

the programmer may set up a number of concurrent

processes (to be implemented as user threads) (b),

(c). if these user threads may be made known to the

OS as kernel threads, through a system call, we have

the situation shown in (c).

the programs indicated in (a), (b) and (c) will run as

OS processes, each in a separate address space. If

the user threads can be registered with the OS as

kernel threads, they are scheduled independently

and can WAIT for synchronous I/O separately. While one blocks, waiting for I/O, another thread of

the same process can run. This is essential if the concurrent system is to run on a multiprocessor.

(a: ieder programma = 1 proces (geen draden); b: mogelijkheid tot meerdere draden, maar OS ziet dit

niet, ziet dit als 1 proces (uitvoering op 1 processor); c: meerdere draden, OS ziet dat ook zo)

4.13 Process state in language systems and OS

1

2

process also has a language-level state comprising the values of variables which are accessible to the

process at a given time; stored in data areas in the memory allocated to the process.

(1) one simple arrangement is that a process has a code segment, which includes the runtime

support and library code as well as user-level code, and two data segments, one for static data and

the heap and one for the runtime stack

this level of the process state is of no concern to the OS, concern of the runtime system which is

responsible for managing the dynamic execution of a program by a process

(2) the user-level code runs within a framework provided by the runtime system. The runtime system

code manages the storage needed by the user program when it is executed. Note that there is a

single thread of control within which the runtime system and the user level code is executed. When

the runtime system has initialized its data structures etc, it will pass control to the user code; user

code calls into the runtime system for service.

figure: at runtime, some specific sequence of module

entries and procedure calls is followed and the stack

structure reflects this dynamic sequence. The variables

become accessible (addressable) by the process when the

stack frame containing them is set up.

when the process exits from the procedure by executing a

return instructions, the stack frame associated with that

procedure is removed from the stack. The frame pointer is

reset to the stack frame of the calling procedure. The calling

procedure may pick up return parameters from the stack.

Note that all the state associated with the procedure

activation is lost on exit from it; the space on the stack will

be reused if the process calls another procedure from its

current context.

heap: long-lived objects; large objects – reference may be put on stack; storage space must be

reclaimed: garbage collection

(als je objecten lang nodig hebt => heap, niet stack => moet expliciet opgeruimd worden door proces)

4.15 Evolution of concurrency in programming languages

A: file server is to run on a dedicated uniprocessor

machine available to its clients across a network.

it takes requests from clients and can be working

on a number of requests at the same time. Eg, it

may need to wait for the disk to be read on behalf

of the client (figure: static modular structure) (file

server => ≠ users moeten tegelijk vraag kunnen

sturen naar file server => kan ik 1 programma

maken om die ≠ vragen (simultaan) aft e

handelen?)

B: simple OS must control a number of devices,

and a devide subsystem (figure) is to be written in

some appropriate language. A device handler may finish its immediate task and may need to wait for

more data to be input or output. It must not lose track of what it was doing. (kan ik een stuk

software programmeren dat al mijn devices controleert?)

C: computerized control system for a chemical plant which carries out periodic data gathering,

analysis and feedback. The computer system should also respond to alarm signals which are

infrequent and unpredictable, but of very high priority. (ik moet een programma schrijven dat

monitoring en control doet en real-time kan reageren op een alarm)

(D: kan ik 1 programma maken dat parallel ≠ partities v data onderzoekt?)

(moelijk/onmogelijk in klassieke sequentiële taal => geen verschillende stacks => geen mogelijkheid

om dingen concurrent te laten lopen)

A shared-memory multiprocessor is available and a

parallel algorithm is proposed to search for a

particular value in a large amount of stored data.

parallel activities should execute code to search a

partition of the data. A mgmt. activity is needed to

start off the correct number of parallel searches,

allocate partitions of the data to them and be ready

to receive the result and stop the searches when

the result is found.

Only one thread of control, one stack and one heap

when a program that was written in sequential programming language is executed.

4.15.2 Concurrency from a sequential language

if separate activities are implemented within a single program written in a sequential programming

language:

* no assistance from the runtime system or OS for managing them as separate activities

* if one activity must be suspended (bc it cannot proceed until some event occurs) and another

resumed, the user-level code mus manage state saving and restoring

* no possibility of making an immediate response to an event by transfer of control within the userlevel code. After an interrupt, control returns to exactly where it was within the process.

4.15.3 Coroutines

motivation for having a single program with internal coroutines is that data can be shared where

appropriate but private data is also supported: each coroutine has its own stack (one thread, one

heap, separate stack per coroutine)

figure: when a coroutine activation is created, the

name of the associated code module, the start address

and the space required for the stack are specified. A

stack is initialized and a control block is set up for the

coroutine activation which holds the stack pointer and

the start address. Kill (co-id) frees the space occupied

bu the activation. The coroutine scheme must specify

who can execute kill.

call(co-id): pass control to the activation co-id at the

address specified in its control block

suspend: pass control back to the parent of this child on the active list

resume(co-id): remove the executor of resume from the active list, pass control to the activation coid and add it to the active list

(coroutines bestaan niet in java

iedere coroutine heeft eigen stack en kan dus eigen toestand bewaren => kan ook stoppen (suspend)

en opnieuw aanvangen (resume) waar die gestopt was

C & D kunnen nog altijd niet want real-time responses zijn hier niet mogelijk © en er kunnen geen 4

dingen op 4 ≠ processoren uitvoeren => coroutines kunnen niet parallel gescheduled worden (D) )

figure: coroutine associated with each device and a polling loop in which the coroutines associated

with the devices are called in turn and the devices are polled from within the coroutines.

such a scheme could be used with device interrupts disabled. In this case, data could be lost if a

device was not polled often enough and a second data item arrived before the first had been

detected and transferred from the interface.

each subprogram is now executed by a separate application process, or user thread, as defined by

the runtime system. Each user thread has its own stack, but control is managed by an outside agency,

the runtime system, and is not programmed explicitly within the subprograms.

the runtime system’s create routine would include an OS call to create a thread, its wait routine

would include a system call to block the thread and its signal routine would include a system call to

unblock the thread. Each thread may then make OS calls that block without affecting the others.

(multi-thread puur als user threads (geval B) verschil met coroutines is dat scheduling door systeem

gedaan w, ik hoef dit niet meer te programmeren;

als systeem 1 draad blokkeert, blokkeert heel het

process (dus alle draden); WAIT: runtime system

gaat nieuwe thread schedulen)

for A each client’s request is handled by a user

thread which executes the file service code. In B

each device handler is a user thread, as shown in the

figure. The way in which the threads might be used

is as discussed above for coroutines.

(Language runtime system doet scheduling, wait

roep LRS op om nieuwe thread te schedulen)

(2) OS schedulet threads; niet meer heel het proces blokkeert als 1 thread blokkeert

real-time systeem kan men nu wel implementeren en ook multi-core nu

=> brengt meer overhead-kosten met zich mee; elke context-switch gaat gepaard met system call

user threads u1 and u2 may run in kernel thread k1;

user thread u3 maps directly onto kernel thread k2;

user threads u4 to u7 may run in either of kernel

threads k3 or k4.

(verhouding is niet 1 op 1; k3 en k4 => 4 draden

moeten met elkaar communiceren voor 2 kernel

threads: vrij complex: 1/1 verhouding veel simpeler

- als op kernel thread niveau evenveel draden zijn)

Windows gebruikt eigen thread library

multi-threading boven OS, op taalniveau

Programma begint altijd met uitvoering van

‘main’ => daar begint draad van uitvoering

main = 1 draad, creëert zelf a en b, 2 nieuwe

draden (dus nu 3 draden)

a.start() => roept methode ‘run’ aan

a.sleep(100) => blokkeer 100 millisec

‘this’ verwijst naar ‘deze draad’

.join() => main wacht tot draden klaar zijn

2 draden worden los van elkaar gescheduled =>

a en b concurreren met elkaar, dus

printresultaat is elke keer anders => multithreaded op multi-core is niet deterministisch