Chapter 10: Low-level synchronization: implementation

10.1 Process synchronization compared with event signal and wait

Process synchronization with hardware events

=> typically arrival of an interrupt signal

=> all possible events can be specified at design time

=> single driver process synchronizes with one hardware event

└> no simultaneous WAITs by processes or SIGNALs by the device

only race condition between WAIT (driver) and SIGNAL (ISR)

no naming problem

General inter-process synchronization

=> a particular process can WAIT for a SIGNAL from any process

=> many processes may WAIT for a shared resource (data structure)

└> synchronize their accesses; wait for the data structure to be free if it is in use

=> a process may have to SIGNAL several others which are not known

└> finished access to shared data => has to signal to other processes that might be waiting for it to be free

10.2 Mutual exclusion

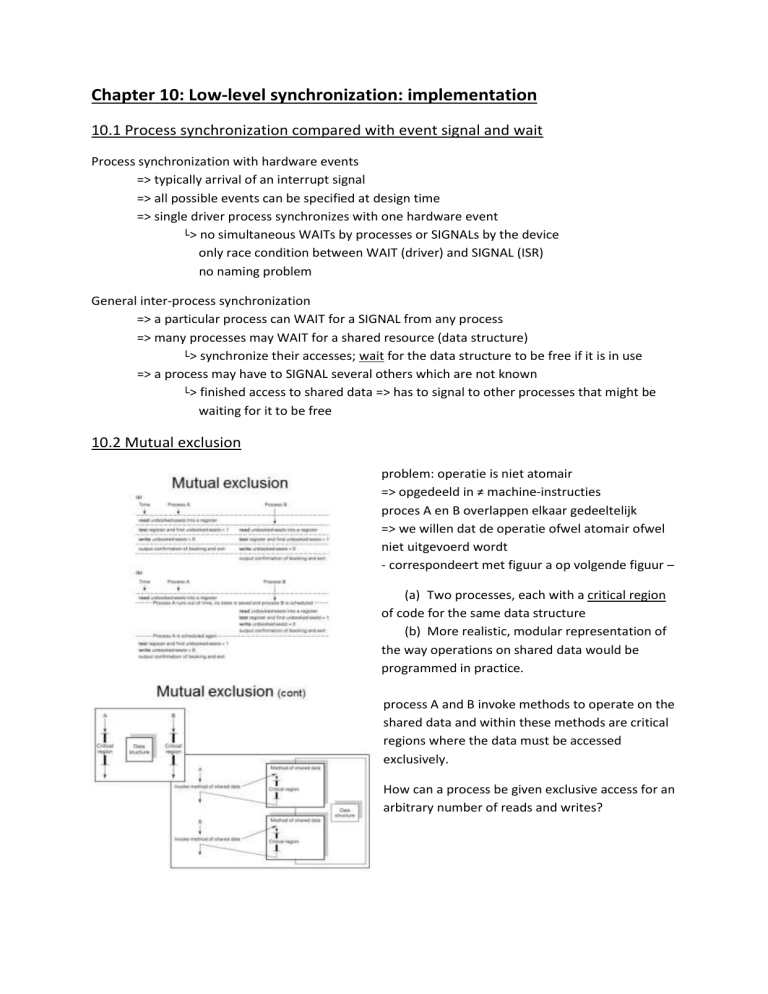

problem: the operation is not atomic

=> it is split into several machine instructions

processes A and B partially overlap

=> we want the operation to be executed either atomically or not at all

(corresponds to part (a) of the following figure)

(a) Two processes, each with a critical region of code for the same data structure

(b) More realistic, modular representation of the way operations on shared data would be programmed in practice.

Processes A and B invoke methods to operate on the shared data, and within these methods are critical regions where the data must be accessed exclusively.

How can a process be given exclusive access for an arbitrary number of reads and writes?

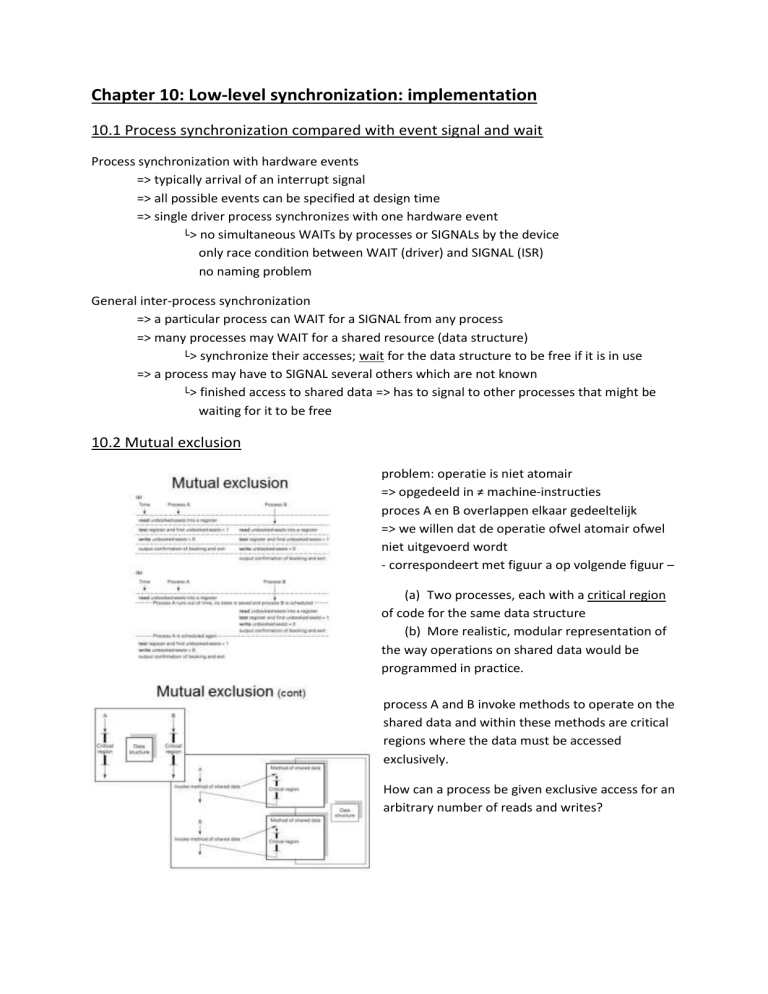

A boolean indicates whether the data structure is free or busy.

The two processes test the variable before entering their critical regions and only enter if the region is free.

=> region free => set the flag to busy and start the critical region; when finished, set it back to free (think back to the bounded buffer: producer and consumer must perform the same test)

This is not a solution, because it adds a new critical region: the boolean is tested and updated in different places, and those instructions are not atomic.

The more processors there are, the greater the chance that processes are scheduled at the same time and enter the critical region together.

(a) The scheme in operation for two processes running on a multiprocessor

(b) Two processes preemptively scheduled on a uniprocessor
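Why the plain boolean fails can be replayed deterministically. The sketch below is not from the notes: the class name NaiveFlagDemo and the schedule strings are made up for illustration. Each process has two separate steps (TEST the flag, then SET it to busy), and a schedule such as "ABAB" decides who runs the next step.

```java
// Deterministic replay of the unsafe "test the flag, then set it" protocol.
// All names here are hypothetical; this is a model, not real scheduling.
class NaiveFlagDemo {

    // Replays a schedule: each letter ('A' or 'B') lets that process run its next step.
    // Returns how many processes ended up inside the critical region.
    static int replay(String schedule) {
        boolean busy = false;          // shared flag: false = free, true = busy
        int[] step = new int[2];       // per process: 0 = must TEST, 1 = must SET, 2 = inside
        int inside = 0;
        for (char c : schedule.toCharArray()) {
            int p = c - 'A';
            if (step[p] == 0) {
                // TEST: read the flag; only move on if it looked free
                if (!busy) {
                    step[p] = 1;
                }
            } else if (step[p] == 1) {
                // SET: write "busy" and enter, based on the (possibly stale) test result
                busy = true;
                step[p] = 2;
                inside++;
            }
        }
        return inside;
    }

    public static void main(String[] args) {
        System.out.println("AABB (A completes first): " + replay("AABB") + " inside");
        System.out.println("ABAB (steps interleaved): " + replay("ABAB") + " inside");
    }
}
```

With "AABB" only A gets in; with "ABAB" both processes see the flag free before either sets it, so both enter. This is the interleaving that a multiprocessor or a preemptive uniprocessor can produce.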

10.3 Hardware support for mutual exclusion

hardware test-and-set instruction

=> atomic instruction for conditional modification of memory location

TAS BOOLEAN: if the boolean indicates that the region is free, set it to indicate busy and skip the next instruction; else execute the next instruction (= jump back to retry TAS)

If the boolean was busy, the next instruction can simply jump back to try the test-and-set again

=> this is called busy waiting

=> manipulates the boolean atomically
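A rough Java analogue of a TAS busy-wait loop, as a sketch only. It assumes AtomicBoolean.getAndSet(true) as a stand-in for the hardware instruction (it atomically writes "busy" and returns the previous value); the class TasLock and the helper countWith are hypothetical names.

```java
import java.util.concurrent.atomic.AtomicBoolean;

// Sketch of busy waiting on an atomically manipulated boolean.
class TasLock {
    private final AtomicBoolean busy = new AtomicBoolean(false); // false = free

    void enter() {
        // Previous value true means "was busy": jump back and retry (busy waiting).
        while (busy.getAndSet(true)) {
            Thread.yield(); // scheduler hint only; this is still a spin loop
        }
    }

    void leave() {
        busy.set(false); // mark the region free again
    }

    // Hypothetical driver: several threads increment a shared counter under the lock.
    static int countWith(int threads, int perThread) {
        TasLock lock = new TasLock();
        int[] counter = {0};
        Thread[] ts = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            ts[i] = new Thread(() -> {
                for (int k = 0; k < perThread; k++) {
                    lock.enter();
                    try {
                        counter[0]++; // critical region
                    } finally {
                        lock.leave();
                    }
                }
            });
            ts[i].start();
        }
        for (Thread t : ts) {
            try {
                t.join();
            } catch (InterruptedException e) {
                throw new IllegalStateException(e);
            }
        }
        return counter[0];
    }

    public static void main(String[] args) {
        System.out.println("final counter: " + countWith(4, 10_000));
    }
}
```

Because every increment happens between enter() and leave(), no update should be lost, whatever the interleaving.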

Why is it a problem that TAS is a complex instruction?

Figure: a boolean and a TAS instruction may also be used to implement synchronization, but only between two processes. A boolean, to be used as a synchronization flag, is initialized to busy. Process A executes TAS when it needs to synchronize with process B. Process B sets the flag to free. There must be only one possible signaller to set the flag to free, since only one signal is recorded.

Forbidding interrupts only works on a uniprocessor.

Multiprocessors: if a composite instruction is used on a multiprocessor, it is preferable that processes only read while they are waiting to enter a critical region and write only once, on entry. This is the case for a TAS instruction. Each processor is likely to have a hardware-controlled cache; a read can take place from the cache alone, whereas any value written to data in the cache must be written through to main memory so that all processors can see the up-to-date value. There would be severe performance penalties if several processes were busy waiting on a flag and the busy waiting involved a write to memory.
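The "read while waiting, write on entry" idea can be sketched in Java as follows. This is an assumption-laden illustration: Java's getAndSet always writes, so the sketch first spins on a plain read with get() and only attempts the atomic write once the flag looks free (a pattern often called test-and-test-and-set, a name not used in these notes). TtasLock and countWith are hypothetical names.

```java
import java.util.concurrent.atomic.AtomicBoolean;

// Sketch: waiting processes only read the flag; a write is attempted only on entry.
class TtasLock {
    private final AtomicBoolean busy = new AtomicBoolean(false); // false = free

    void enter() {
        while (true) {
            // Waiting phase: reads only, which can be served from the local cache.
            while (busy.get()) {
                Thread.yield();
            }
            // Entry phase: one atomic write attempt; succeeds if the flag was still free.
            if (!busy.getAndSet(true)) {
                return;
            }
        }
    }

    void leave() {
        busy.set(false);
    }

    // Hypothetical driver: threads increment a shared counter under the lock.
    static int countWith(int threads, int perThread) {
        TtasLock lock = new TtasLock();
        int[] counter = {0};
        Thread[] ts = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            ts[i] = new Thread(() -> {
                for (int k = 0; k < perThread; k++) {
                    lock.enter();
                    try {
                        counter[0]++;
                    } finally {
                        lock.leave();
                    }
                }
            });
            ts[i].start();
        }
        for (Thread t : ts) {
            try {
                t.join();
            } catch (InterruptedException e) {
                throw new IllegalStateException(e);
            }
        }
        return counter[0];
    }

    public static void main(String[] args) {
        System.out.println("final counter: " + countWith(4, 10_000));
    }
}
```

A failed entry attempt still costs one write, so the sketch reduces write traffic during waiting rather than eliminating it.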

The simplest instruction for supporting mutual exclusion is a read-and-clear (RAC) operation. After the RAC instruction is executed, the destination register contains the value of the flag before it was cleared. If the destination register contains zero, the data structure was already busy, in use by some other process, but the RAC instruction has done no harm. The executing process must not access the shared data and must test the flag again. If the destination register contains a value other than zero, the data was free and the executing process has claimed the shared data by clearing the flag.

(read-and-clear: while reading the value into a register you also overwrite it; whatever the value was, you set the flag to busy and only then test what the value was before => previously free: I may enter the critical region, and the flag is already set to busy; previously busy: no problem, because the new value is the same as the old one, so keep trying. Read-and-clear is a critical instruction => atomic; before RAC was available on RISC machines, this was handled in software)
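The read-and-clear convention (non-zero = free, zero = busy) can be mimicked in Java, as a sketch: AtomicInteger.getAndSet(0) plays the role of RAC here, which is an assumption for illustration and not the hardware instruction itself. The class RacFlag and its method names are hypothetical.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Sketch of a read-and-clear style flag: non-zero = free, zero = busy.
class RacFlag {
    private final AtomicInteger flag = new AtomicInteger(1); // starts free

    // Atomically returns the old value and leaves the flag cleared (busy).
    int readAndClear() {
        return flag.getAndSet(0);
    }

    // One claim attempt: the old value tells us whether the data was free.
    boolean tryClaim() {
        return readAndClear() != 0;
    }

    // Busy-waiting entry: keep testing until the old value was non-zero.
    void claim() {
        while (!tryClaim()) {
            Thread.yield();
        }
    }

    void release() {
        flag.set(1); // mark free again
    }

    public static void main(String[] args) {
        RacFlag f = new RacFlag();
        System.out.println("first claim:   " + f.tryClaim()); // flag was free
        System.out.println("second claim:  " + f.tryClaim()); // already busy, clearing again did no harm
        f.release();
        System.out.println("after release: " + f.tryClaim());
    }
}
```

Clearing an already cleared flag changes nothing, which is why a failed attempt "has done no harm".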

CAS: compare-and-swap compares the value of a flag with some value ‘old’ and, if they are equal, atomically sets the flag to some value ‘new’. This instruction requires both a memory read and a memory write: typical of a CISC.

In summary, shared data can be accessed under mutual exclusion by:

* forbidding interrupts on a uniprocessor

* using a hardware-provided composite instruction

10.3.1 Mutual exclusion without hardware support

‘out-cr’ => the process is outside its critical region

processes get their turn in the order of the circle (strict turn-taking, the order is fixed)

=> so the next process may also be one that has only just arrived (no FIFO)

Java has something similar to read-and-clear => an atomic boolean (AtomicBoolean: operations on the boolean are executed atomically)

=> CAS (compare-and-set): I read the boolean and set the new value (true) in the same step

while (!busy.compareAndSet(false, true)) => as long as compareAndSet returns false, the while loop keeps running
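The compare-and-set loop from the line above, wrapped in a small class as a sketch. AtomicBoolean.compareAndSet(expected, newValue) is the real JDK method; the class CasSpinLock and its method names are hypothetical.

```java
import java.util.concurrent.atomic.AtomicBoolean;

// Sketch of a spin lock built on compareAndSet(false, true).
class CasSpinLock {
    private final AtomicBoolean busy = new AtomicBoolean(false); // false = free

    // Single attempt: succeeds only if the flag was false, and sets it to true atomically.
    boolean tryEnter() {
        return busy.compareAndSet(false, true);
    }

    // Busy-waiting entry: loop while compareAndSet keeps returning false.
    void enter() {
        while (!busy.compareAndSet(false, true)) {
            Thread.yield();
        }
    }

    void leave() {
        busy.set(false);
    }

    public static void main(String[] args) {
        CasSpinLock lock = new CasSpinLock();
        System.out.println("first tryEnter:  " + lock.tryEnter()); // flag was free
        System.out.println("second tryEnter: " + lock.tryEnter()); // flag is busy, nothing changes
        lock.leave();
        System.out.println("after leave:     " + lock.tryEnter());
    }
}
```

Note that the lock only serializes entry to the critical region; it says nothing about a condition such as "is there room in the buffer?".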

An atomic boolean would be a solution with only 1 producer and 1 consumer => then it is simply a synchronization between 2 processes; but we now have 3 producers and 3 consumers. Why is there still a problem? => several producers are waiting until there is room in the buffer again. The atomic boolean ensures that they cannot proceed at the same time, but there may still be too little room in the buffer => the condition (is there room?) is decoupled from the critical region

10.4 Semaphores

The attributes of a semaphore are typically an integer and a queue.

We can envisage semaphores as a class located in the OS and also in the language runtime system.

(a semaphore is composite: a value and a queue; semwait: if the value is 0, nothing more can be subtracted => the process is queued (running => blocked); the condition is tested and exclusive access is granted in the same step)
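A minimal sketch of such a class in Java, under stated assumptions: the methods are spelled semWait()/semSignal() in Java camelCase (the notes write semwait()/semsignal()), the class name SimpleSemaphore is hypothetical, and the JVM monitor's wait set stands in for the semaphore queue instead of an explicit queue.

```java
// Sketch of a counting semaphore: an integer plus a (here implicit) queue of waiters.
class SimpleSemaphore {
    private int value; // number of available "permits"; never negative in this sketch

    SimpleSemaphore(int initial) {
        value = initial;
    }

    // Tests the condition and claims access in one atomic (synchronized) step.
    synchronized void semWait() {
        boolean interrupted = false;
        while (value == 0) {
            try {
                wait(); // caller is blocked; the monitor's wait set acts as the queue
            } catch (InterruptedException e) {
                interrupted = true; // remember the interrupt, keep waiting
            }
        }
        value--;
        if (interrupted) {
            Thread.currentThread().interrupt();
        }
    }

    // Makes one unit available and wakes one waiter, if any.
    synchronized void semSignal() {
        value++;
        notify();
    }

    synchronized int value() {
        return value;
    }

    public static void main(String[] args) {
        SimpleSemaphore s = new SimpleSemaphore(2);
        s.semWait();
        s.semWait();
        System.out.println("value after two semWait(): " + s.value());
        s.semSignal();
        System.out.println("value after semSignal():   " + s.value());
    }
}
```

This variant always increments on semSignal() and lets the woken waiter decrement again; the observable behaviour matches "increment only if nobody is waiting", but the bookkeeping is simpler.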

10.5.1 Mutual exclusion

A semaphore initialized to 1 may be used to provide exclusive access to a shared resource such as a data structure.

Figure 1: one possible time sequence for three processes A, B and C, which access a shared resource. The resource is protected by a semaphore called lock, initialized to 1. A first executes lock.semwait() and enters its critical code, which accesses the shared resource. While A is in its critical region, first B and then C attempt to enter their critical regions for the same resource by executing lock.semwait(). The figure shows the states of the semaphore as these events occur. A then leaves its critical region by executing lock.semsignal(); B can then proceed into its critical code and leave it by lock.semsignal(), allowing C to execute its critical code. In general, A, B and C can execute concurrently. Their critical regions with respect to the resource they share have been serialized so that only one process accesses the resource at once. (mutual exclusion; -------- = blocked)
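The lock semaphore for three processes can be tried out with the JDK's java.util.concurrent.Semaphore, used here as a stand-in for the semaphore class of the notes (acquireUninterruptibly() for semwait(), release() for semsignal()). The class LockSemaphoreDemo and the bookkeeping counters are hypothetical additions to observe the behaviour.

```java
import java.util.concurrent.Semaphore;
import java.util.concurrent.atomic.AtomicInteger;

// Sketch: three threads A, B, C serialize their critical regions with a semaphore set to 1.
class LockSemaphoreDemo {

    // Returns {final value of the shared counter, maximum number of threads seen inside at once}.
    static int[] run(int rounds) {
        Semaphore lock = new Semaphore(1);
        AtomicInteger inside = new AtomicInteger();
        AtomicInteger maxInside = new AtomicInteger();
        int[] shared = {0};
        String[] names = {"A", "B", "C"};
        Thread[] ts = new Thread[names.length];
        for (int i = 0; i < names.length; i++) {
            ts[i] = new Thread(() -> {
                for (int k = 0; k < rounds; k++) {
                    lock.acquireUninterruptibly();   // lock.semwait()
                    try {
                        maxInside.accumulateAndGet(inside.incrementAndGet(), Math::max);
                        shared[0]++;                 // access to the shared resource
                        inside.decrementAndGet();
                    } finally {
                        lock.release();              // lock.semsignal()
                    }
                }
            }, names[i]);
            ts[i].start();
        }
        for (Thread t : ts) {
            try {
                t.join();
            } catch (InterruptedException e) {
                throw new IllegalStateException(e);
            }
        }
        return new int[] {shared[0], maxInside.get()};
    }

    public static void main(String[] args) {
        int[] r = run(1000);
        System.out.println("counter = " + r[0] + ", max inside critical region = " + r[1]);
    }
}
```

Outside the acquire/release pair the three threads run concurrently; only the critical regions are serialized.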

Figure 2: processes should be able to synchronize their activities. When A reaches a certain point, it should not proceed until B has performed some task. This synchronization can be achieved by using a semaphore initialized to zero, on which A should semwait() at the synchronization point and which B should semsignal(). The figure shows two possible situations that can arise when a process must wait for another to finish some task: (a) A reaches the point before B signals that it has finished the task; (b) A arrives at its waiting point after B has signalled that the task is finished. One process (A) only executes semwait(), while the other only executes semsignal(). Only one process may semwait() on the semaphore, while any number may semsignal(). (blocking semaphore: the semaphore starts at zero; a process only needs to know the semaphore, not the other processes)
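Both situations (A arrives first, or B signals first) can be sketched with a JDK Semaphore initialized to zero. The class SyncPointDemo, the parameter aStartsFirst and the log strings are hypothetical; starting A first only makes it likely, not certain, that A reaches the synchronization point before B signals.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.Semaphore;

// Sketch: A must not proceed past its sync point until B has finished its task.
class SyncPointDemo {

    static List<String> run(boolean aStartsFirst) {
        Semaphore taskDone = new Semaphore(0);          // starts at zero: A blocks until signalled
        List<String> log = new CopyOnWriteArrayList<>();

        Thread a = new Thread(() -> {
            taskDone.acquireUninterruptibly();          // semwait() at the sync point
            log.add("A proceeds");
        });
        Thread b = new Thread(() -> {
            log.add("B finishes task");
            taskDone.release();                         // semsignal()
        });

        try {
            if (aStartsFirst) {
                a.start();                              // case (a): A is likely to wait for B
                b.start();
            } else {
                b.start();                              // case (b): B has signalled before A arrives
                b.join();
                a.start();
            }
            a.join();
            b.join();
        } catch (InterruptedException e) {
            throw new IllegalStateException(e);
        }
        return log;
    }

    public static void main(String[] args) {
        System.out.println("case (a): " + run(true));
        System.out.println("case (b): " + run(false));
    }
}
```

In both cases the log order is the same: the signal is recorded in the semaphore value, so it is not lost when B runs before A waits.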

10.5.3 Multiple instances of a resource

We want to limit the number of simultaneous accesses.

Every time a process Pi requests access to the resource and the value of its associated semaphore is greater than zero, the value is decremented by 1. When the value of the semaphore reaches zero, subsequent attempts to access the resource must be blocked, and the requesting process is queued.

When a process releases the resource, a check is made to see whether there are any queued processes. If not, the value of the semaphore is incremented by 1; otherwise one of the queued processes is given access to the resource.

In terms of the operations: a process requests a resource instance by executing semwait() on the associated semaphore. When all the instances have been allocated, any further requesting processes are blocked (queued) when they execute semwait(). When a process has finished using the resource allocated to it, it executes an exit protocol consisting of semsignal() on the associated semaphore.
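A sketch of the same protocol with a JDK Semaphore initialized to the number of resource instances. The class ResourcePoolDemo and the counters that track how many instances are in use are hypothetical additions for observing the limit.

```java
import java.util.concurrent.Semaphore;
import java.util.concurrent.atomic.AtomicInteger;

// Sketch: at most `instances` processes may use the resource at the same time.
class ResourcePoolDemo {

    // Returns the largest number of processes observed using the resource simultaneously.
    static int maxSimultaneous(int instances, int processes) {
        Semaphore free = new Semaphore(instances);      // value = number of free instances
        AtomicInteger inUse = new AtomicInteger();
        AtomicInteger maxInUse = new AtomicInteger();
        Thread[] ts = new Thread[processes];
        for (int i = 0; i < processes; i++) {
            ts[i] = new Thread(() -> {
                free.acquireUninterruptibly();          // semwait(): blocks when all instances are taken
                try {
                    maxInUse.accumulateAndGet(inUse.incrementAndGet(), Math::max);
                    Thread.yield();                     // pretend to use the resource for a moment
                    inUse.decrementAndGet();
                } finally {
                    free.release();                     // semsignal(): exit protocol
                }
            });
            ts[i].start();
        }
        for (Thread t : ts) {
            try {
                t.join();
            } catch (InterruptedException e) {
                throw new IllegalStateException(e);
            }
        }
        return maxInUse.get();
    }

    public static void main(String[] args) {
        System.out.println("max simultaneous users: " + maxSimultaneous(3, 10));
    }
}
```

The observed maximum depends on scheduling, but it can never exceed the initial semaphore value.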

10.6 Implementation of semaphore operations

Concurrent invocation of semaphore operations is possible

=> it will certainly happen on a multiprocessor

10.6.1 Concurrency in the semaphore implementation

Busy waiting may be acceptable on a multiprocessor for short periods of time.

A process’s state can be changed from running to blocked by the OS if the resource is busy when it executes semwait(). The state of some process may be changed from blocked to runnable when the semaphore is signalled. The implementation of semwait() and semsignal() therefore may involve changing the value of the semaphore, the semaphore queue and the process state. These changes must be made within an atomic operation to avoid inconsistency in the system state: all must be carried out or none should be.

Arbitrary numbers of semwait() and semsignal() operations on the same semaphore could occur at the same time. (if the OS itself needs semaphores, the implementation really has to sit at the lowest level)

Figure: a boolean can be associated with each semaphore. Processes then busy wait on the boolean if another process is executing semwait() or semsignal() on the same semaphore.
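One way to picture the boolean-per-semaphore scheme, as a sketch under assumptions: an AtomicBoolean guard is spun on only for the short time another operation is updating the value and queue, and LockSupport.park/unpark stands in for the OS changing a process between blocked and runnable. GuardedSemaphore, Waiter and countWith are hypothetical names, and interrupts are simply ignored.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.locks.LockSupport;

// Sketch: semaphore value and queue are updated atomically under a busy-wait guard.
class GuardedSemaphore {
    // One queue entry per blocked thread.
    private static final class Waiter {
        final Thread thread = Thread.currentThread();
        volatile boolean signalled = false;
    }

    private final AtomicBoolean guard = new AtomicBoolean(false); // the boolean of the figure
    private final Deque<Waiter> queue = new ArrayDeque<>();
    private int value;

    GuardedSemaphore(int initial) { value = initial; }

    private void lockGuard() {
        while (!guard.compareAndSet(false, true)) Thread.yield(); // short busy wait
    }

    private void unlockGuard() { guard.set(false); }

    void semWait() {
        lockGuard();
        if (value > 0) {                 // resource free: take it, no blocking
            value--;
            unlockGuard();
            return;
        }
        Waiter w = new Waiter();
        queue.addLast(w);                // queue change happens under the same guard
        unlockGuard();
        while (!w.signalled) LockSupport.park(this); // blocked until handed the semaphore
    }

    void semSignal() {
        lockGuard();
        Waiter w = queue.pollFirst();
        if (w == null) value++;          // nobody waiting: just increment
        unlockGuard();
        if (w != null) {                 // otherwise pass the semaphore to the first waiter
            w.signalled = true;
            LockSupport.unpark(w.thread);
        }
    }

    // Hypothetical driver: use the semaphore as a lock around a shared counter.
    static int countWith(int threads, int perThread) {
        GuardedSemaphore lock = new GuardedSemaphore(1);
        int[] counter = {0};
        Thread[] ts = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            ts[i] = new Thread(() -> {
                for (int k = 0; k < perThread; k++) {
                    lock.semWait();
                    counter[0]++;
                    lock.semSignal();
                }
            });
            ts[i].start();
        }
        for (Thread t : ts) {
            try { t.join(); } catch (InterruptedException e) { throw new IllegalStateException(e); }
        }
        return counter[0];
    }

    public static void main(String[] args) {
        System.out.println("final counter: " + countWith(4, 5_000));
    }
}
```

The guard is held only for a few instructions, which is the situation in which busy waiting is considered acceptable; waiting for the resource itself is done by blocking, not by spinning.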

10.6.2 Scheduling the semwait queue, priority inversion and inheritance

Process priority could be used to order the semaphore queue. It would be necessary for the process priority to be made known to the semwait() routine. The priority inversion problem (a low-priority process holding a semaphore delays a higher-priority process that is waiting for it) can be solved by allowing a temporary increase in priority to a process while it is holding a semaphore. Its priority should become that of the highest-priority waiting process => priority inheritance
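A toy, single-threaded model of a priority-ordered semaphore queue with priority inheritance. Nothing here is real scheduling: PriorityInheritanceModel, Proc and the convention that a larger number means higher priority are assumptions made for illustration.

```java
import java.util.PriorityQueue;

// Toy model: queue ordered by priority; the holder inherits the highest waiting priority.
class PriorityInheritanceModel {

    // Hypothetical process record.
    static final class Proc {
        final String name;
        final int basePriority;   // larger number = higher priority (assumption)
        int effectivePriority;    // may be raised temporarily while holding the semaphore

        Proc(String name, int basePriority) {
            this.name = name;
            this.basePriority = basePriority;
            this.effectivePriority = basePriority;
        }
    }

    private Proc holder;          // process currently holding the semaphore, or null
    private final PriorityQueue<Proc> waiting =
            new PriorityQueue<>((x, y) -> Integer.compare(y.basePriority, x.basePriority));

    // semwait(): returns true if p got the semaphore, false if it was queued.
    boolean semWait(Proc p) {
        if (holder == null) {
            holder = p;
            return true;
        }
        waiting.add(p);           // the priority is made known to the wait routine
        holder.effectivePriority = Math.max(holder.effectivePriority, p.basePriority);
        return false;
    }

    // semsignal(): the holder drops back to its base priority;
    // the highest-priority waiter (if any) becomes the new holder and is returned.
    Proc semSignal() {
        if (holder != null) {
            holder.effectivePriority = holder.basePriority;
        }
        holder = waiting.poll();
        return holder;
    }

    public static void main(String[] args) {
        PriorityInheritanceModel sem = new PriorityInheritanceModel();
        Proc low = new Proc("low", 1);
        Proc mid = new Proc("mid", 5);
        Proc high = new Proc("high", 10);
        sem.semWait(low);         // low holds the semaphore
        sem.semWait(mid);         // mid queues, low inherits 5
        sem.semWait(high);        // high queues, low inherits 10
        System.out.println("low runs at priority " + low.effectivePriority);
        System.out.println("next holder: " + sem.semSignal().name);
    }
}
```

With the inherited priority, the low-priority holder cannot be pre-empted by medium-priority processes while a high-priority process is waiting on it.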

10.6.3 Location of IPC implementation and process (thread) mgmt

Distinguish between processes that are created as user threads and managed only by the user-level runtime system, and user threads that the runtime system makes known to the OS as kernel threads.

Figures 2 and 3 illustrate the location of IPC and process (thread) mgmt in the two cases.

We are concerned with the synchronization of user threads over user-level data structures. A user thread which needs to access a shared resource, or needs to synchronize with another user thread, can call semsignal() and semwait() in the semaphore class in the runtime system.

semwait(): if the semaphore value indicates that the shared resource is free, the user thread executing semwait() can continue; there is no call to block the thread. If the resource is busy, the thread must be noted in IPC mgmt as waiting for the resource, then blocked, and another thread must be scheduled. Figure 2: blocking and scheduling are done in the user-level runtime system and the OS is not involved. The single application process that the OS sees has remained runnable throughout the switches from user thread, to IPC, to process mgmt, to user thread. Figure 3: the OS must be called to block the kernel thread corresponding to the blocked user thread.

semsignal(): if there is no waiting thread, the semaphore value is incremented and there is no call to process mgmt. If threads are waiting on the semaphore, one is selected and removed from the semaphore queue and a call is made to unblock it. Figure 2: the call is to user-level process mgmt; Figure 3: the call is to the OS. In both cases the signalled thread’s status is changed from blocked to runnable.